"Bedlam" Technique for using LLMs with BDD

BDD + LLM = Bedlam

Bedlam (noun): Chaos, uproar, confusion.

In our context, Bedlam is the controlled chaos of coding with an LLM, kept within the rigid constraints of Behavior-Driven Development.

BDD has been around for a while: first Cucumber (originally written in Ruby, later ported to Java and other languages), then SpecFlow (now Reqnroll) for C#. I’ve forced it on my teams with varying degrees of success over the years. The difference this time is that AI genuinely speeds things up. We get the specs without having to write them by hand, and that’s easier for devs to accept. If we want to avoid vibe-coding and be more deliberate with our AI efforts, then having specs as context is the way.

Constraints and Context

You can’t trust LLMs to run wild. Airplanes are too complex for any one pilot to fly from memory alone, so pilots rely on checklists. Modern software features are likewise too complex for any one developer to hold in their head, or for an LLM to wing without structure.

Saying “don’t use AI” is no more an answer than telling people not to fly complex aircraft. The answer is checklists: lists of features and the behaviors associated with them. Those lists keep the LLM focused on specific, well-defined tasks.

BDD gave us that structure nearly two decades ago. Gherkin features are human-readable contracts. They’re testable. They’re versioned. They live with the code in the repo. But like tests, they are onerous to create and maintain. The LLM makes it feasible to write them without a developer revolt.

How It Actually Works

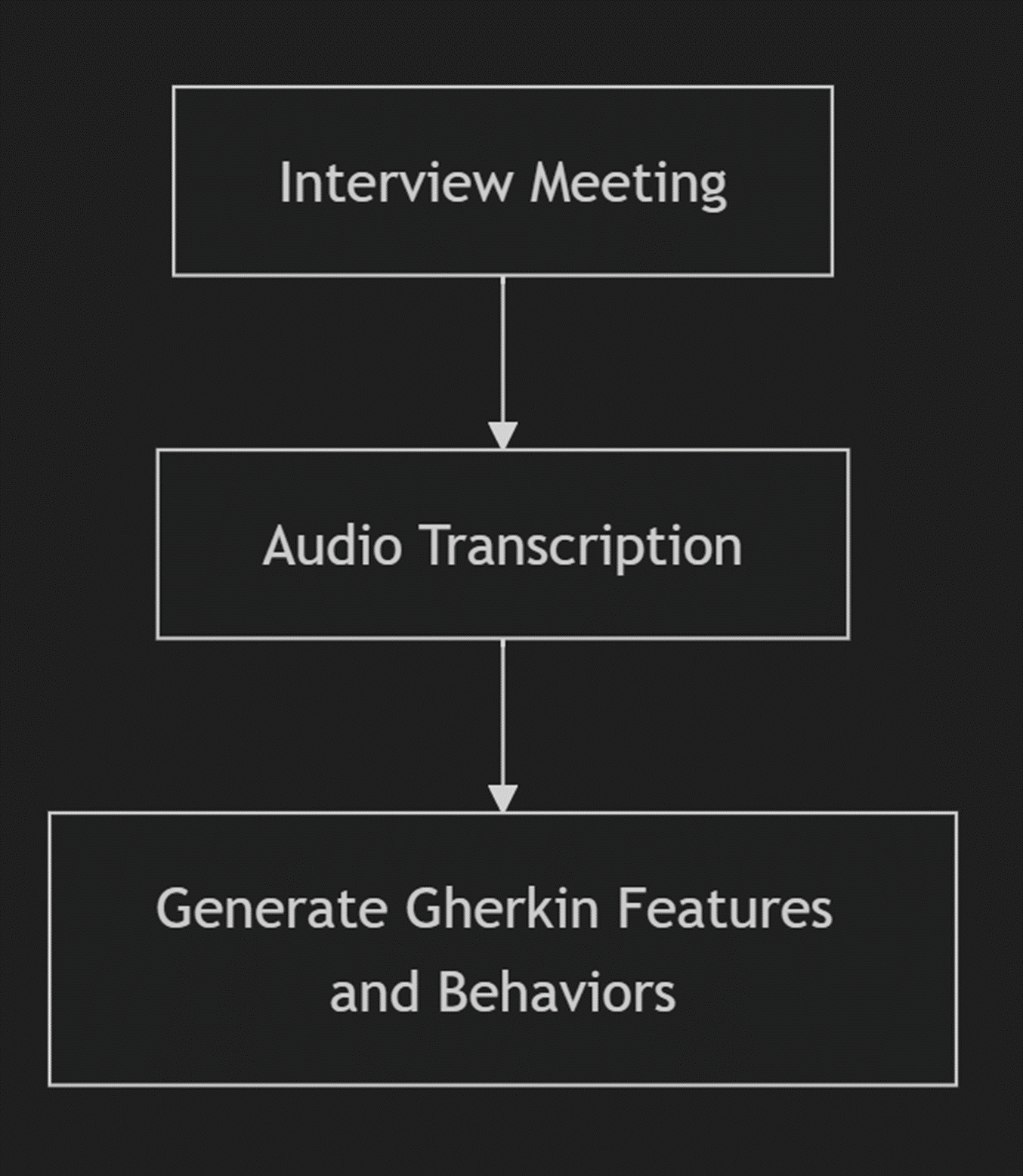

These are the first two steps in the ICECAP process. I’ll post more about that process as we go.

Interview the stakeholders (that’s the “I” in ICECAP), transcribe the audio, and generate Gherkin features and behaviors from the transcript.

After the interview, we codify the specs (that’s the “C” in ICECAP) as follows:

Old Human Tasks: Take notes in the meeting, mentally extract features, dream up individual behaviors for each feature. Spend the rest of the day typing.

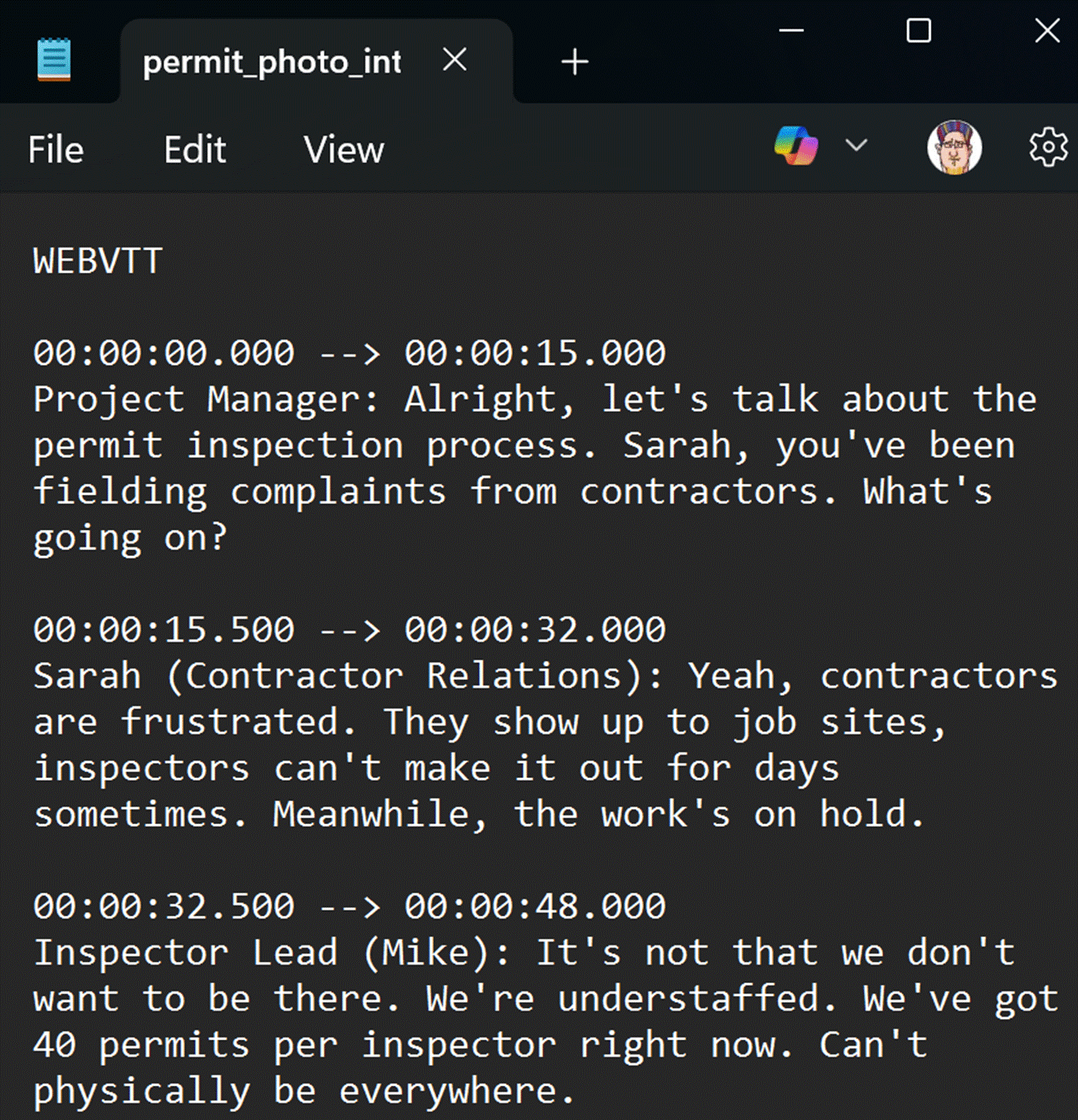

New Human Tasks: Record the meeting and generate a transcript as a VTT (WebVTT) file, which adds timestamps. Note: this is different from an AI-generated meeting summary.

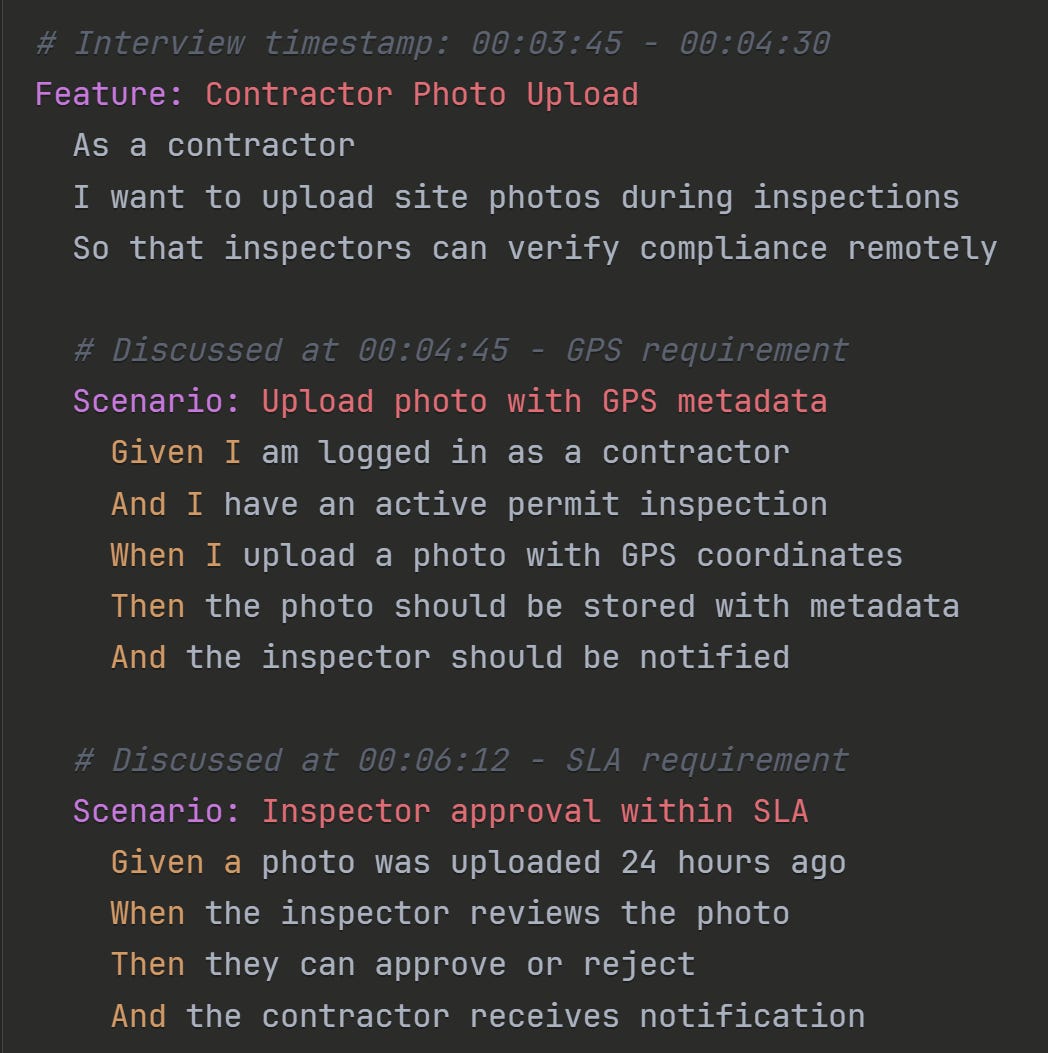

LLM Prompt: “Generate BDD features and behaviors from this meeting transcript. Include the timestamps in the comments for each.”
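You can paste the raw VTT file straight after that prompt. A slightly cleaner option is to strip cue numbers and styling tags first, so every line carries just a start time, the speaker (if the transcription tool recorded one), and the words. The Python below is a minimal sketch of that, assuming the tool exports standard WebVTT; `parse_vtt`, `Cue`, and `build_prompt` are hypothetical names, and the prompt text is the one quoted above.

```python
import re
from dataclasses import dataclass

# A WebVTT timing line: "00:01:02.500 --> 00:01:05.000" (hours optional),
# possibly followed by cue settings such as "align:start", which are ignored.
TIMING = re.compile(
    r"^((?:\d+:)?\d{2}:\d{2}\.\d{3})\s+-->\s+((?:\d+:)?\d{2}:\d{2}\.\d{3})"
)
VOICE = re.compile(r"<v(?:\.[^\s>]+)?\s+([^>]+)>")  # <v Speaker> voice tag
TAG = re.compile(r"<[^>]+>")  # any other markup, e.g. </v>, <i>, <c.x>

# The prompt from the article, verbatim.
PROMPT = (
    "Generate BDD features and behaviors from this meeting transcript. "
    "Include the timestamps in the comments for each."
)


@dataclass
class Cue:
    start: str
    end: str
    text: str


def clean(line: str) -> str:
    """Turn '<v Name>words</v>' into 'Name: words' and drop remaining tags."""
    return TAG.sub("", VOICE.sub(r"\1: ", line)).strip()


def parse_vtt(vtt: str) -> list[Cue]:
    """Return the cues in a WebVTT transcript.

    Header, NOTE, and STYLE blocks have no timing line, so they are skipped.
    """
    cues: list[Cue] = []
    # Blocks are separated by blank lines.
    for block in re.split(r"\n\s*\n", vtt.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            match = TIMING.match(line.strip())
            if match:
                # Everything after the timing line is the cue payload;
                # join lines the transcription tool wrapped.
                text = " ".join(clean(l) for l in lines[i + 1:] if l.strip())
                cues.append(Cue(match.group(1), match.group(2), text))
                break  # one timing line per cue; an ID line before it was skipped
    return cues


def build_prompt(cues: list[Cue]) -> str:
    """The prompt followed by one '[start] text' line per cue."""
    body = "\n".join(f"[{c.start}] {c.text}" for c in cues)
    return f"{PROMPT}\n\n{body}"
```

The cleanup is optional; its main benefits are fewer tokens and cues that read as whole sentences rather than caption-sized fragments.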

The timestamps are an added bonus if you ever want to review what was actually said. LLMs make lots of mistakes, and pointing back to the ground truth is always smart.
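Those timestamp comments also make the output checkable. The Python below is a rough sketch, assuming the LLM writes each timestamp in a Gherkin comment line (`# ...`) directly above its `Scenario:` (tags in between are allowed) and that you have the set of cue start times from the VTT file; `ungrounded_scenarios` is a hypothetical helper, not part of any BDD tool. It lists scenarios that cite no timestamp, or only timestamps that don’t exist in the transcript, so a human can check those against the recording first.

```python
import re

# A VTT-style timestamp such as "00:04:12.000" (hours optional).
TIMESTAMP = re.compile(r"\b(?:\d+:)?\d{2}:\d{2}\.\d{3}\b")
# Gherkin scenario keywords and their synonyms.
SCENARIO = re.compile(r"^(Scenario(?: Outline)?|Example|Scenario Template):\s*(.+)$")


def ungrounded_scenarios(feature_text: str, cue_starts: set[str]) -> list[str]:
    """Names of scenarios whose preceding comments cite no real cue start time."""
    problems: list[str] = []
    pending: list[str] = []  # timestamps in comments since the last other line
    for line in feature_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("@"):
            continue  # blank lines and tags may sit between comment and scenario
        if stripped.startswith("#"):
            pending.extend(TIMESTAMP.findall(stripped))
            continue
        match = SCENARIO.match(stripped)
        if match and not any(ts in cue_starts for ts in pending):
            problems.append(match.group(2).strip())
        # Any other line (Feature, Background, steps) ends the comment run.
        pending = []
    return problems
```

Exact matching is deliberately strict: a timestamp the LLM rounded or invented gets flagged, which is the point of checking against the ground truth.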

Nothing revolutionary. Nothing you couldn’t do manually. But here’s what changes: we actually do it. The LLM removes the friction that made developers skip the BDD part.

A Real Example

A county needs contractors to upload site photos during permit inspections. Photos need GPS metadata. Inspectors approve or reject them within 48 hours. Standard government work.

The interview generates this kind of transcript:

Feed that transcript to an LLM and you get Gherkin features. Commit them to your repo so they live alongside the code.
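Getting the output into the repo is a few lines of glue. Here is a minimal Python sketch, assuming the LLM returns one feature per response and that the project keeps Gherkin under a `features/` folder (a common convention; adjust to your layout). `save_feature` is a hypothetical helper. It names the file after the `Feature:` title and refuses to save text that has no usable `Feature:` line.

```python
import re
from pathlib import Path


def save_feature(feature_text: str, repo_root: Path, features_dir: str = "features") -> Path:
    """Write LLM-generated Gherkin to <repo_root>/<features_dir>/<slug>.feature."""
    match = re.search(r"^\s*Feature:\s*(.+)$", feature_text, re.MULTILINE)
    if match is None:
        raise ValueError("no 'Feature:' line found; refusing to save")
    # "Site Photo Upload" -> "site_photo_upload"
    slug = re.sub(r"[^a-z0-9]+", "_", match.group(1).lower()).strip("_")
    if not slug:
        raise ValueError("feature title produced an empty file name")
    path = repo_root / features_dir / f"{slug}.feature"
    path.parent.mkdir(parents=True, exist_ok=True)
    # Normalize to exactly one trailing newline.
    path.write_text(feature_text.rstrip() + "\n", encoding="utf-8")
    return path
```

From there it’s an ordinary `git add` and commit, so the specs go through the same review as the code.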

Why This Actually Works

Traditional BDD often failed because nobody wanted to write Gherkin. It was tedious busywork that got skipped. The code and tests drifted from the specs. The specs collected dust.

LLMs make Gherkin nearly free. More than that, they make it the most reliable artifact, because everything else is generated from it. The features stay in sync because they’re the source.

The constraint is the value. By forcing the LLM into BDD structure, we get testable requirements and living documentation. The LLM can’t wander off into creative nonsense because it’s boxed in by a proven methodology.

The Learning Loop

Strategic Doing taught us that action plans aren’t enough; you need a learning loop. This workflow builds that in. Every meeting is a hypothesis about what the system should do. The features document what we learned.

The Bigger Picture

We didn’t generate any code here, but we will next time: I’ll make a video using this method and move on to code generation. Keeping specs and code in the same repo lets you change both in a single PR, so the spec change documents the reason for the code change.